And neither is this. In fact, it lost some of the lovely detail even reproducing it at this resolution. But I like it anyway.

Just because, part seven

Just a quick one before the day closes, an image I got this morning while trying (and failing) to capture a bird in a treetop illuminated by the first orange rays of the sun. I had turned towards the sun peeking through the trees and was dodging back and forth, hoping to find something perched that I could silhouette against the light. As I moved about, the nearby holly bush caught my eye, and I shifted my focus much closer. Came out much better than expected, with just a smidgen of indirect light shaping the leaves.

But it’s still not art.

More pics from today coming soon.

An open letter

This post was fostered by an exchange on Panda’s Thumb and simply needs to be said. This is an open letter to everyone who might call themselves devoutly religious, creationist, conspiracy theorist, new age or alt med supporter, paranormal or psychic believer, and so on. Or anyone that has ever used the phrase, in any form, “Science doesn’t know everything.”

It’s simple. Science has nothing to prove to you. You do not get to dictate what science is, or how it should present any evidence at all to you. And the reason for this, very simply, is that science works.

Science is a methodical process of learning, and within that method sit several distinctive functions that make us as confident in our results as we can humanly be, even negative results (which are just as important as the positive ones, if not more so.) It is these results which ‘prove’ anything and everything that is routinely accepted as the noun, “science,” and when it comes right down to it, that’s the only thing that is needed.

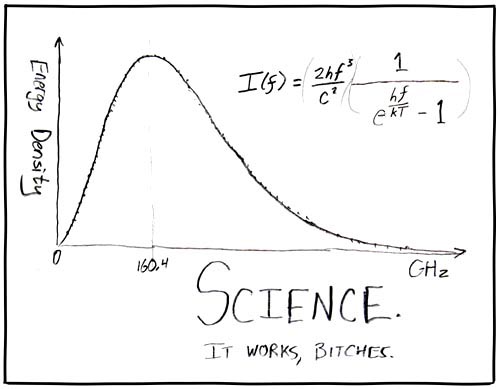

Randall Munroe of xkcd, very early on in his prolific webcomic career, put it very succinctly:

He left it up to the reader to determine what that graph and equation showed, which is part of the comic’s charm; to get the point, one has to do a little legwork. I am going to undermine this a bit, with some misgivings, by revealing that this diagrams the Cosmic Microwave Background – the leftover energy from the Big Bang theory of the origin of the universe, which is largely based on the various redshifts of the stars we can see throughout the sky. One of the consequences of the theory was that, if true, we should be able to see very faint effects of the cooling that would have been taking place from the initial high-energy state, as the energy dissipated over nearly 14 billion years. Given that time frame, this energy should be at a certain level; the problem was, we had no way to detect such low levels of energy when it was proposed, and the prediction was shelved and almost forgotten for sixteen years. A couple of radio astronomers detected the energy by accident in 1965 and could not determine what it was – the dots were connected after they brought it up to physicists at Princeton University. In other words, no one was looking for it, but found it anyway, right smack where it was predicted to be.

That’s just one of the myriad reasons why we use science, and why it has proven its value as a process. So please understand that when you claim that science has something to prove to you, you’re not just wrong, you’re both self-centered and arrogant (which may explain why you get treated as such.) Nobody really cares that you have a preference for some state of affairs, or an emotional involvement, or simply don’t understand and therefore believe that science must be wrong. Your assertions, weird attempts at logic or philosophy, and selectivity over what you decide should be ‘evidence’ mean nothing at all to anyone who knows why we use science. And that ‘why’ is: because to be useful, an idea has to perform some function.

There’s a reason for this. Throughout human history, there have been millions upon millions of ideas, attempts to explain something that we witnessed. The vast majority of them were completely and utterly wrong, and the most distinctive lesson we can learn from that is how easy it is for us, as a species, to be wrong. I can predict that numerous people, who this letter is addressed to, will seize upon this as exactly their point, except that science has already gotten there ahead of you – hundreds of years ahead of you. Everything in science revolves around the simple recognition that some idea might be wrong, and in order to rule this out, we have to take pains to test it carefully. I wish the same efforts were demonstrated by everyone who denigrates science, or any aspect of it, but if that were the case there would be no point in this letter.

Most importantly, any explanation, any theory or hypothesis, any idea at all, has to lead somewhere to be anything more than an idle thought. Aliens are visiting our planet? Fine. Who are they, how did they get here, where did they come from, and what do they want? When you think you have an answer to those, then demonstrate how you know you’re not wrong. Your natural remedy is effective? Fine. How many people did it fail to work for? What do you mean, you never counted? How do you know it works, then? For any positive results you want to quote, how do you know something else wasn’t working instead? God created the universe? Fine. Who is this god, where does it live, why does it do anything, and how do you know this? Oh, you have a book? Very nice. I have several hundred – what’s your point? You do realize that millions of people before you all had their own books, and stories, and folklore, revolving around the sun and the moon and the seasons and the natural disasters – obviously their books and stories didn’t really lead anywhere, so you need to go at least that extra step to show that you’ve got a more useful idea than they had. And if your answer runs along the lines of how “everything” proves a god, which god is it proving, and how did you rule out this being the Aztec or Aborigine gods?

Double-standards that border on hypocrisy are not what any adult should be guilty of, and that’s exactly what I’m referring to here. If you think some scientific standard (or law, or theory, or whatever) isn’t working, then explain how your own preference works better. This is a minimum standard for competing scientific theories, so your superior idea should surpass this; if you haven’t bothered to even contemplate this, you deserve whatever response you receive. Finding some fault with scientific knowledge isn’t enough, not by a long shot – you can’t logically assume some other idea ‘by default.’ You need to explain not only whatever little point of evidence you’ve selected, but all of it. If it’s right, then it’s right across the board, so this should be extremely easy to demonstrate.

You might be the type that caught how the word ‘prove’ was in quotes in the third paragraph, and there’s a simple reason for this: nothing is ever proven beyond the shadow of a doubt – all we can work with is the preponderance of evidence. The quotes were recognition of this fact, a bit of bare honesty that reflects how our knowledge must work. The polar opposite of this is assertion, where anyone who doesn’t know and has no way of knowing makes definitive statements anyway, even when the concept they’re promoting relies on explanations of why we cannot see the evidence we should expect – you know, like supernatural existence, or the government is hiding the technology, or cynics can suppress the effect, and so on. Anyone whose favorite concept requires excuses has no call to question any process where supporting evidence is meticulously tested.

Now for a big one. When thousands of educated people the world over are using some aspect of science on a daily basis, the chances are ridiculously, infinitesimally slim that the fatal flaw that you believe you know of did not occur to any of them, ever. So at the very least, have just a touch of humility and ask anyone if they’ve considered your idea, before you triumphantly announce you know just what’s wrong. Otherwise, once again, you deserve whatever nasty response you receive, and to be honest, probably much nastier than that. While it is certainly true that aspects of scientific knowledge have gone through corrections in the past, the people who produced these corrections were more than a little knowledgeable in the field to begin with; nowhere to be found is a correction from someone who wouldn’t pass a first-year exam in the subject. That’s the kind of stuff found in comic books, not real life. In short, it took science to correct the flaws in science – not revelation, not inspiration, not emotional surety, not astrological readings, not anecdotes from convention attendees. Not ever.

If at any time your argument includes something even vaguely related to, “It’s possible,” you’re only demonstrating the lack of evidence that you possess. Possibility is too vague to have any value – physically it means there is nothing known to prevent it, and philosophically it means everything that can be imagined, neither of which can be said to narrow down the field. For everything claimed to be ‘possible,’ it is also possible that it doesn’t exist, so as an argument it’s solely an appeal to a single perspective. Demonstrate what’s probable, and how much so, to even begin to impinge on functionality or value.

Closely related to this is the frequent selection of exceptions, such as where some scientist was actually wrong, in utter dismissal of the vast percentage of cases where science works exceptionally well. Everyone and their brother, of course, believes that they’re the lucky or special one who will be that exception, which is exactly why Las Vegas exists. Yet focusing on exceptions does nothing whatsoever to demonstrate the value of any alternate proposal; again, it’s simply an appeal to a single perspective, which demonstrates (again) the lack of something more convincing.

You are welcome, of course, to hold whatever you want to believe as a personal preference, and no one has a right to challenge that. But this should not be used as a shield when someone doesn’t agree with you. If you thought it was important enough to post about, or inform your opinions on policy, or guide your decisions in child-rearing, then you’re obviously interacting with others and we’re well out of the realm of personal choice; you are now obligated to demonstrate your reasoning. If you can’t, you should expect to be chastised or ignored. And kindly do not resort to the tired old, “People need to respect my opinion” argument, because they don’t; respect is the recognition of value, not a right that anyone has solely for existing. You get respect by earning it, and to do that, you need to convince others of the value behind your beliefs. Or, you can keep them to yourself, which is the only state that can accurately be called, ‘personal.’

If you’re not convinced, that’s fine – there’s no requirement that you should be. Science races along, believe it or not, without your support. But nobody owes you anything, and no one is obligated to try and swim upstream against your cherished beliefs. You’re not that important, and your attempt to pit your beliefs against the world has meaning only to you. If you honestly want to know, the information is readily available and very often free-of-charge. What it takes to comprehend it, however, is the honest desire for knowledge, emphasis on honest. If all you’re trying to do is shore up your own beliefs, well, that’s just insecurity, and solely your own problem.

The days of yore, part two

Okay, so, I had this idea a couple of weeks ago, to feature an image from the summer solstice on the day of the winter solstice – kind of a callback to nicer weather, and a reflection of that little archive list on the sidebar, right? Yeah, so, first, I had to stick to digital images, since over a decade of slides in my stock are only dated by the month and year I got them developed, so no help there (no, I don’t keep a shooting journal.) But then it became clear how rarely I was out and shooting subjects on the summer solstice. I can shoot a lot in the summer, but it’s not every day, and it appears that June 21st is just one of those days when I didn’t.

So while I had intended to go back to a previous year, I ended up with just six months ago; the solstice fell on June 20th this year.

Doing artsy stuff with insect photography is a little difficult, since combining a photogenic insect, a useful pose, and a decent setting into something appealing is rather demanding. This is one of my few examples, and nothing that I consider high art, but you know how I feel about it anyway. Yet it also illustrates something else, which is where light becomes an issue with such work. This image is natural light, taking advantage of the sun angle to bring out the textures of the leaf, but this required a slower shutter speed and wider aperture than I would normally have used to capture sharp detail, and it produced some interesting focus effects. Notice how the leaf is unfocused in so many areas, but sharp in a vertical stripe under the fly. This basically means the leaf in that area was the same distance from the camera as the fly.
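For anyone who wants rough numbers on why that sharp stripe is so narrow, here is a minimal sketch using the standard close-up approximation for total depth of field, 2·N·c·(m+1)/m², where N is the f-number, c the circle of confusion, and m the magnification. The function name and every value fed into it (the 0.02mm circle of confusion, life-size magnification, the three apertures) are assumptions picked for illustration, not settings recorded from this image.

```python
def macro_depth_of_field_mm(f_number, magnification, coc_mm=0.02):
    """Approximate total depth of field (in mm) for close-up work.

    Uses the common close-up approximation 2*N*c*(m+1)/m**2.
    coc_mm is an assumed circle of confusion, not a measured value.
    """
    if f_number <= 0 or magnification <= 0 or coc_mm <= 0:
        raise ValueError("f_number, magnification and coc_mm must be positive")
    return 2 * f_number * coc_mm * (magnification + 1) / magnification ** 2


if __name__ == "__main__":
    # Hypothetical apertures at an assumed life-size (1:1) magnification.
    for f_number in (4, 8, 16):
        dof = macro_depth_of_field_mm(f_number, magnification=1.0)
        print(f"f/{f_number}: about {dof:.2f} mm in focus")
```

With those assumed values, the zone of sharpness works out to roughly 0.3mm at f/4 and about 1.3mm at f/16, which is why opening the aperture for natural light leaves only a thin slice of leaf, at the same distance as the fly, in focus.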

We’ll take a quick look at closer range, too, mostly to show off the color of the eyes. It’s not that this is especially interesting, though I could call this a product of my Green Phase if I was inclined to be pretentious. But insect eye color, and some bird feathers, often depends on light angle rather than inherent pigmentation. I have often noticed that the throat color of ruby-throated hummingbirds (Archilochus colubris) doesn’t show up at all with direct flash, making it even more involved to try and get images that display this color. But I also have several arthropod images where the eyes take on a rainbow hue depending on the angle of the strobe. And so it was with this example, as I switched to augmented light for further images.

It might be hard to believe, but this is the same fly (probably genus Condylostylus) in the same setting, less than a minute after the one above. Here all of the light is coming from the camera flash, on a bracket placing it almost directly above the camera – check the position of the shadow for the best guide to where the light was coming from. Not only does the fly not appear to be as green, as the grey legs and lower thorax show up clearly, but the eyes have become orange; I wish I could give a succinct description of why this is, but there are numerous factors that can contribute, so maybe I’ll tackle this in a later post. Notice that the leaf also takes on a rattier appearance, the lighting seems a bit harsh, and the background has dropped into darkness.

This is one of the benefits of having a flash bracket that allows a lot of position changes for the strobe. Different angles produce different effects in the image, and while you have a cooperative subject, it’s often worth the effort to try moving the strobe around a bit. It also helps to know what light does, especially with high and low contrast, diffuse light (like from a softbox) or bright and direct, and even multiple lighting or supplemental reflectors. Some forms of lighting can produce strange highlights, or be reflected in the subject in some way. I’ve known portrait photographers that do very close work who may put a single cross of black tape across the middle of their softbox diffusers; when it is reflected in the eyes of their subjects, it takes on the appearance of a window with four panes. By the same token, square or oddly-shaped diffusers can sometimes produce curious reflections in macro subjects.

Anyway, not only have we successfully thwarted Armageddon yet again, for those of us in the northern hemisphere we’re over the hump and the days are getting longer now. I’m personally more psyched when the first plants start budding out and I can get into my photographic stride, but this is a good reference point anyway. Maybe someday we’ll go back to calendars that reflect the solar year much better.

Minor updates

While I pay no attention to the news, I’m still hearing about the impending winter storms across much of the US, and this coincides with one of the posts from two years ago in the sidebar. So, while I was doing some of the year end updates on the calendar and such, I decided to add two pages to the Tips Gallery. Should you be among those who witness a winter storm and still want to get out and photograph something, there is now a page on cold weather photography available. This actually contains more info than the original post.

For all the southern hemispherans, there’s a page on hot weather tips as well. Even if you’re not from the southern half of the planet, you can still go there and reminisce about sweltering days.

The calendar has been mostly updated now, as has the page on how to create your own guide to local sun & moon rising & setting times.

One more page is on the way, but I have to stage some more photos for it so it’ll still be a few days yet. It’s relevant to about half of the posts that I make here, so if you’re still around, you stand a good chance of finding it interesting. Stay tuned.

Changing perspective

I just find this amusing. The Girlfriend, like probably 85% of the world’s population, isn’t terribly fond of bugs, most especially not the big ones. But she’s watched me pursue numerous arthropodic subjects, and still finds fascination in the details revealed from macro work.

The net result of this is seen here, what I’m fairly certain is an Acanthocephala declivis, a variety of leaf-footed bug. The specimen is 30mm long, so not at all a small bug. The Girlfriend spotted it while out shopping one cold evening, sitting motionless on a sidewalk (the bug, not her.) Instead of stepping around (or on) it, she hunted about until she found a discarded drink cup, scooped it up, and brought it home for me to photograph. She had no knowledge of what it actually was, no idea of defensive mechanism or potential nastiness, but she knew I’d like it. Not to mention that it’s no longer the season for such subjects and my photography is slowing down commensurately, so I’m happy to have something to work with.

Both images here are ‘studio’ shots, indoors with a cut stem from the forsythia bushes and a photo print of mine as the background; the lighting is a basic studio strobe primarily overhead, with a little slave strobe filling the shadows. There is a lot to be said for using lighting that runs from AC power, because otherwise I’m working the hell out of my rechargeable batteries.

Anyway, if your Significant Other isn’t really into your photographic pursuits, be patient – you never know what might change. I have to admit that The Girlfriend still isn’t up to going on bug hunts with me, and won’t act as a snake wrangler. Yet.

This one goes to twelve

Hey, I figure I had to post something precisely at 12:12 am on 12/… aw shit.

The Ghost of Christmas Past

Sorry. Couldn’t resist.

But at least I waited a day just to thwart all that goofy ‘Caturday’ stuff…

The ethical responsibility of scientists

Sometimes, we humans get a cultural belief or concept stuck in our collective heads, something typically called a meme, and therefore think that this has importance solely because it’s repeated. Some sociologists have expressed the idea that this is exactly what culture is, and there’s an interesting theory that memes actually go through a process of natural selection. That’s not what I’m addressing here, though; what I’m examining is one of the more prevalent ones, which is, “Do scientists bear the ethical responsibility for those things that they create or discover?”

While this came into vogue very strongly with the advent of “the nuclear age” and the detonation of the first atomic bomb, it’s actually older than that, and examples abound throughout history. Before the Enlightenment, scientists were occasionally persecuted, and prosecuted, for daring to present evidence that went against church precepts; there’s a famous cartoon illustration of Darwin as an ape; and Mary Wollstonecraft Shelley may have introduced the idea of the ‘mad scientist’ with her Frankenstein book, tellingly subtitled The Modern Prometheus. Science fiction literature toyed with the subgenre, but it was the motion picture industry that nurtured this idea into some kind of supposedly-poignant social commentary.

That last phrase may sound a little derogatory in nature, and it’s intended to be. The viewing of technological achievement in terms of the warfare or aggression that it may assist is quite sparse – save for how often ‘scientists’ receive this castigation. Most notable is how often the event mentioned previously, the creation of the fission bomb, is considered the pinnacle of shortsightedness, but we see plenty of the attitude today with the frothing rants about genetically modified ‘Frankenfoods.’ I have yet to see anyone bitching about gunpowder or the machine gun, napalm or submarines, catapults or the jet turbine – even when all of these were developed with warfare in mind, and most of them still serve that primary purpose.

Overall, however, the attitude seems to be that, before developing any new understanding of energy, or even biology, scientists bear the responsibility of predicting just what use anyone in the future may wish to put it towards. Scientists, it would seem, are expected to be both prescient and complete in their understanding of human nature, or stop their investigations into physics entirely in favor of… well, who knows? Underlying this would seem to be the idea that technology itself can be good or bad in nature, a mistake far too commonly made.

It’s easy to see atomic weapons in this way, provided that one thinks in childlike terms anyway. The nasty effects of radiation sickness complement the wholesale destruction of which these weapons are considered capable, usually in ignorance of several important factors. The first is that the weapons have only been used twice, three days apart, over sixty years ago. The second is that, in terms of destruction, countless bombing campaigns in the same war produced exponentially higher effects – it’s really hard to argue that one bomb is somehow more irresponsible than a handful that produce the same effect.

But the biggest two factors have the most bearing and are completely ignored. The development of atomic weapons within the Manhattan Project was in direct response to the same efforts being made in Germany, and was specifically undertaken to ensure that the Nazis were not the only power to possess the capability. The attitude about science suddenly takes on different meanings when it’s contemplated in terms of both nationalism and protection, and most of the scientists working on the projects left high-level positions in research to ‘serve their country,’ something that we find commendable when it’s undertaken by 18-year-old recruits with no developed sense of ethics nor understanding of world conflict. That Nazi Germany fixated on the wrong approach and achieved nothing is not the fault of anyone who actually succeeded.

The second important consideration regarding the use of these weapons requires another bit of perspective. While the Third Reich had collapsed, Japan was continuing its efforts unabated, and showed every sign of pursuing its goals until it achieved either Pacific dominance or total destruction; the cultural attitude of Japan in that time was well-known, and was frequently displayed. Japanese soldiers did not surrender: surrender was more shameful than death. The bombings of Hiroshima and Nagasaki were demonstrations that destruction was going to be the only outcome if this outlook was maintained. Important here is that this gambit worked; the alternative would have been numerous raids on the Japanese mainland that would have resulted in many times the casualties, including among the Allied Forces. The bombs brought about peace in the most effective method available to the Allies at the time, and immediately, too. They have not been used since. So, how does this place the ethics of the creators?

It bears noting that J. Robert Oppenheimer, scientific head of the Manhattan Project, was openly distressed about both the potential of the weapons and his own hand in them, perhaps indeed recognizing that political figures haven’t always been the best judges of how such power should be used. On the other hand, Edward Teller, who was instrumental in multiplying the released energy for the subsequent fusion bomb (a distinction worth knowing,) campaigned strenuously for the peacetime uses of such processes, not as weapons but as tools. Whether he did this to cement his name as father of a new approach to energy, or through his own guilt over the destructive nature of atomic weapons, is open to speculation. And I’ve remarked before about the possibility that atomic weapons actually reduced the amount of warfare in the latter half of the twentieth century.

And then we come to the other example I opened with, that of genetically-modified organisms (usually meaning grains,) or GMO. The attitudes about this are all over the map, exacerbated by the largest amount of ignorance and misinformation that we’ve seen since religion became popular, but again, nearly everyone who speaks of the horrors of such activities puts the blame on scientists. First off, the inability to distinguish between genetic research and corporations that market new genetic strains has a lot to do with this, and shows a stunning amount of ignorance. Much higher levels of ignorance, though, are demonstrated by the numerous espoused perils that are either rumors or strictly imaginary, never evidenced in any form; fear-mongering raised to cult status. That virtually everyone who rants about GMO cannot even begin to describe what it entails is embarrassing for our species, to be honest.

Let’s get a couple of things straight. Genetic modification has been taking place since the dawn of life – that’s close to four billion years. Directed genetic modification has been taking place for the last several thousand years, once humans noticed the patterns that were visible during reproduction of domesticated animals and plants, and began experimenting – every dog or horse, every food crop or decorative plant, is the result of human-directed genetic modification. And we do indeed see the horrors of this, especially in animal breeds with serious health and physiological issues. Most of them we openly ignore because we want pugs with short noses and spindly-legged horses for racing, but the bare truth is, nature would have weeded these issues out.

GMO is the result of recognizing how portions of the gene contribute to certain traits, and thinking that we could probably keep the good bits without having to deal with the bad ones. Instead of breeding together two examples of wheat with preferred traits, and having to retain the flaws they had at the same time, the genes are spliced in fragments to achieve just the selected traits. Natural selection can and does accomplish this all by itself, but much slower. Nor are the genes that are spliced some kind of chemical creation or nuclear mutation, but simply portions of already existing genes – traits that nature already produced. The basic premise is, “Pick the best, leave behind the not-so-good” – the same exact attitude displayed by everyone in the produce department of their grocery store. Obviously, if we can increase the number of ideal examples and minimize the poor ones, harvests are more productive, land is more efficient, and the energy of farming is optimized.

Are there unintended consequences? Sure! The same can be said for everything that humans do, so if this is an issue, lock yourself in a dark closet and try to ignore the consequences of that. But are these unintended consequences going to result in mutations that somehow take over, or turn into something dangerous, or whatever the imagined fears of activists might be? No. Pure and simple, no – because that’s not how genes or mutations work, even remotely. If it was, we’d be seeing it from nature all by itself. There are real concerns, for instance, that pestilential insects or microbes will quickly develop new strains in response to modified crops – but that’s true regardless, and again, they’re not going to become superbugs. The concern is over the short effectiveness of any new crop against the amount of time and effort to develop it. The protestors who bring up issues such as antibiotic resistant bacteria in hospitals, introduced species harming the ecological balance, or the corporate tactics of Monsanto, are talking about entirely unrelated topics – ones that exist with or without genetic modification.

Throughout all this, and many other instances besides, sits this strange assumption that scientists are somehow another species or something, perhaps a secret cabal – one where every scientist has the same attitude, approach, and goals. Therefore, one can talk about ‘scientists’ as a collective body, instead of it being a ridiculously broad term for individuals who do research. Yet every one is solely human, and within this broad category sits every attitude and mindset that we find in every other walk of life – and competence and failings too. Then we have the assumption that these brainiacs remain oblivious to the consequences of their actions, intent only on pursuing some narrow goal, when five seconds of thought (which apparently is a stretch for many who offer their opinions) would reveal that someone who works closely in any given field is guaranteed to know every consequence of their actions far better than someone who, for instance, gets their knowledge of mutations from comic books.

So let me return to the idea that I stated briefly above. Knowledge itself, regardless of what it is, is not good or bad, and cannot be. The same can be said for people. Only actions are good or bad. The pursuit of knowledge is one of our highest callings as a species, and has resulted in the remarkable standards that we have now – even if you’re not presently reading this on a wireless tablet computer, you certainly can. While some aspects of knowledge can indeed be put to uses we’re embarrassed or horrified over later, this is not a function of the knowledge, but the people making those actions (note, too, how often this is political in nature.) Nobody could possibly have the ability to predict what anyone else might get up to with this, much less everyone. Nor is it even worth considering that some form of knowledge should be avoided or abandoned because it may eventually produce something bad; this can be true of anything. Just because some quaint phrase has been repeated more than once doesn’t mean it has originated with the slightest application of rational thought. Making any decisions, but most especially ethical considerations, on something as feeble as catchphrases or movie tropes is a rather frightening thing for our species to engage in.

Frustrations, part eight: Where is it?

There’s not going to be anything insightful hidden within this post; I’m just writing it out because I’m hoping it will be cathartic.

First things first. I’m actually pretty good about being able to find some place on a map that I’ve been to years before, based largely on the general landscape and my memory of how I’d arrived, and this even applies to little unplanned side trips. It helped create the Google Earth placemarks that are available on several pages of the photo gallery, such as here. And yet, there’s one place I simply cannot nail down, and it’s pissing me off.

In 1999, when I was living in North Carolina, I took a photo trip to Florida. I had overnighted in Gainesville and left very early the next morning for the closest area I could locate on a map that might provide something scenic for sunrise, a small park on the Suwannee River. I remember being in a very rural area, and turning off the main road onto a semi-long drive (perhaps a kilometer in length) before reaching the parking area. By flashlight, I checked out the info kiosk, and followed the footpath to the river overlook – maybe a few hundred meters, certainly not a long hike. There was a raised, railed wooden platform, perhaps the size of a porch, right on the water’s edge yet still largely shaded by the forest. Dawn revealed a quiet stretch of river, perhaps half a kilometer wide, with pads of river weeds floating by, and not a single identifying characteristic other than flowing, as I recall, close to due south at that point. I took two frames, just for the sake of having shot something, and headed back. I chased a couple of insect images (you’re shocked, I can tell), including a huge grasshopper, but mostly what I recall from the walk back, now that the sun was up, was the number of vast spider webs just above my head that I hadn’t seen earlier. Golden silk spiders (Nephila clavipes) are disturbingly large arachnids that belong in prehistoric movies, and not hanging a meter or two over the path that you’re walking upon.

The other thing that I remember was a small field of wildflowers, at the junction of the park’s access road and the main route, that was besieged by butterflies; I stopped there and shot two rolls of film. It was barely out of the trees, bordering a quiet road that I turned right onto later to head south and reconnect, eventually, with Interstate 75.

Knowing that I would have taken the most direct route from Gainesville to the river, I figure I had to have driven either Florida Route 26 or 24 for most of the side trip there, probably 26, which would indicate I was near Fanning Springs State Park – but that doesn’t look right at all. I wasn’t keeping a journal and there’s nothing in any of the images that provides a clue. While the river overlook deck may not be visible from the air, I still should have been able to find the park by the nature of the roads… but it just ain’t happening.

Edit, several days later: I finally realized that I probably still had the road atlas I’d used for the trip, and dug it out for reference. The only park that was illustrated, and seemed to fit, was Manatee Springs State Park near the town of Manattee Rd, Florida (that’s not a typo.) The locale and parking lot seemed right, but I couldn’t spot the observation deck or the location of the butterfly field. Obsessed now, I checked the park’s website and began going through the photo gallery. On page two, I found this image, which demonstrates a startling connection when it is superimposed on my own image, seen below. Pay particular attention to the background treeline:

So, my memory was pretty solid, except that the weeds were probably fixed and not floating by, and I’m still not sure about the butterfly field, but I think it might be the little turnoff to the left only a few hundred meters up the access road from the parking lot. Oh, and the river’s only a quarter-klick wide right there. Sue me.

And just as a side note, the last image seen here was taken on the same side trip. Also, the butterfly image on the home page slide show was taken in that field. I try to make the most of my photo trips ;-)

But yeah, I feel better now.