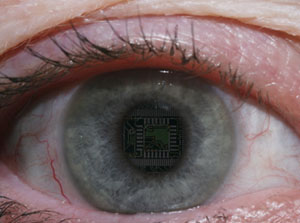

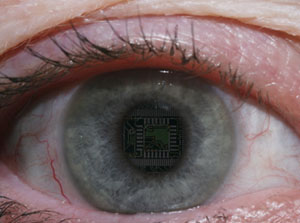

From time to time, and surprisingly in some rather serious media sources, we hear about the technological singularity, the fast-approaching (so we’re told) point where artificial intelligence will surpass human intelligence, and quite often right alongside we have speculations about the “machines taking over.” As over-dramatic as that sounds, some quite intelligent humans have indicated that this is, at least potentially, an ominous threat. I have to say that I am yet to be convinced, and see an awful lot of glossed-over assumptions within that make the entire premise rather shaky.

Let’s start with a basic question: what do we even mean by “surpassing human intelligence”? Is human intelligence even definable? It’s not actually hard to connect enough data sources to far exceed the knowledge of any given human, and a few years back we saw this idea coupled with a search algorithm to pit a computer program named “Watson” against two champions of the game show Jeopardy!, and it did quite well. Mind you, this was using the whole of the internet as a data source, including the vast amount of mis- and dis-information that it encompasses – having more accurate sources of info would have produced far better results. But no one seems terribly concerned about this and the impending singularity is still considered to be at some unknown point in the future, so I’m guessing this isn’t what anyone means by “intelligence.”

So perhaps the idea is a machine that thinks like a human, able to make the same esoteric connections and intuitive leaps, and moreover, able to learn in a real-time, functional manner. I’ve already tackled many aspects of this in an earlier post, so check that out if I seem to be blowing through the topic too superficially, but in essence, this is a hell of a lot harder, but also pointless in many ways. First off, the emotions that dictate so many of our thoughts and actions are also responsible for holding us up in a variety of manners, actually driving us away from efficient decisions and functional pursuits very frequently – perhaps as much as, if not more than, we can think rationally. While we might be the pinnacle of cognitive function among the various species of this planet (and it’s worth noting that we can’t exactly prove this in any useful or quantitative way,) we can easily see that our efficiency could be a hell of a lot better. Plus, we have these traits because that’s what was selected by the environmental and competitive pressures, and there’s virtually no reason to try and duplicate them in any form of machinery or intelligence, since it’s not more human-like thinking that we can use (there being no shortage of humans,) but something that serves a specific purpose and, for preference, arrives at functional decisions faster and more accurately – that’s largely the point of artificial intelligence in the first place, with the added concept that it can be used in dangerous environments where we would prefer not to send humans. This is a revealing facet, because it speaks of the survival instincts we have, ones that no machine would possess unless we specifically programmed it in.

We even hear that machines becoming self-aware is a logical next step, largely a foregone conclusion of the process, and likely the key point of danger. Except, we don’t even know what self-awareness is – the idea that self-awareness or ‘consciousness’ automatically occurs once past a certain threshold of intelligence or complexity is nonsense. Nor is there any reason to believe that it would provoke any type of behavior or bias in thinking. Various species have different levels of self-awareness, whether it be flatworms fleeing shadows or primates recognizing themselves in a mirror, which is almost as far as we can even take this concept – without language, we’re not going to know how philosophical any other species gets. But it certainly hasn’t done anything remarkable for the intelligence of chimps and dolphins.

This is where it gets interesting. Human thought is tightly intertwined with the path we took, over millions of years, to get here. Just creating a matrix of circuitry to ‘think’ won’t automatically include any of our instincts to survive, or reproduce, or compete for resources, or worry about the perceptions of others. We’d have to purposefully put such things within, because the structure of electronics only permits specified functions. This structure is so limiting that, at the moment, we have no readily available method of generating a truly random number – circuits cannot depart from physics to produce a signal that has not originated from a previous one, which is the only thing that could be ‘random’ [I’m hedging a little here, because I’ve heard that there has been progress in using quantum mechanics as a function, which might generate true randomness, or might not, but I’m pretty sure this is still in conceptual stages either way.]
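To make the determinism point concrete, here is a minimal Python sketch of the software generators most programs rely on: hand one the same starting seed and it gives back the same ‘random’ numbers every time. The function name `draw` and the seed values are made up for illustration, and this only shows the software case; it says nothing either way about hardware or quantum sources.

```python
import random


def draw(seed, count=5):
    """Return `count` pseudo-random integers from a generator started at `seed`.

    `draw` is a hypothetical helper for this illustration only.
    """
    rng = random.Random(seed)  # private generator; the seed fixes all of its future output
    return [rng.randint(1, 100) for _ in range(count)]


first = draw(2015)
second = draw(2015)

# Same seed in, same sequence out: the numbers only look random.
print(first == second)  # True
```

Every value traces back to the seed, which is the ‘previous signal’ in question.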

And the rabbit-hole gets deeper, because this impinges on the deterministic, no-free-will aspect of human thought; in essence, if physics is as predictable as all evidence has it, then our brains are ultimately predictable as well, just as much as an electronic brain would be. You can go here or here or here or here if you want to follow up on that aspect, but for this post we’ll just accept that no one has proven any differently and continue. So this would mean that we could make an electronic brain like a human’s, right? And in theory, this is true – but it’s a very broad, very vague theory, one that might be wrong as well, since we are light-years away from this point.

We’ll start with, human brains are immensely complicated, and very poorly understood – we routinely struggle with just comprehending people’s reactions, much less mental illness and brain injuries and how memories are even stored or retrieved. We’re not even going to come close to mimicking this until we know what the hell we’re mimicking in the first place, and this pursuit has been going on for a long time now – decades to centuries, depending on what you want to consider the starting point. The various technology pundits that like throwing out Moore’s Law (which is not even close to a law, but merely an observation of a short-term trend that has already failed in numerous aspects) somehow never recognize that our understanding of the human brain has not been progressing with even a tiny fraction of the increases in computing power, nor have these computing increases done much of anything towards helping us understand a cerebral cortex. It’s a pervasive idea that computers becoming more complex brings them closer to becoming a ‘brain,’ but there’s nothing that actually supports this assumption, and a veritable shitload of factors that contradict it soundly. The brain, any brain, is an organ dedicated to helping an organism thrive in a highly-variable environment, through both interpretation and interaction, and it is only because of certain traits like pattern recognition and extrapolation that we can use our own to make unmanned drones and peanut butter. But this does not describe any form of electronic circuitry in the slightest – it took a ridiculously long time to produce a robot that could walk upright on two legs, which one would think is a pretty simple challenge.

Yet if nature did it, then we can do it in a similar manner, right? Or even, just set up a system that mimics how nature works and let it go? Well, perhaps – but this is not exactly going to lead to an impending breakthrough. Life has been present on this planet for 3.6 billion years or better, but complex cells are only 2 billion years old, multi-cellular life only 1 billion, and things that we would recognize as ‘animals’ only 500 million years old – meaning that life spent roughly six times as long being extremely simple as it has spent developing anything that might have a nervous system at all. All of this was dependent on one simple factor: that replication of the design could undergo changes, mistakes, mutations, variations that allow both selection and increasing complexity. So yes, we could potentially follow the same path, if we create a system that permits change and have a hell of a lot of time to wait.

Which brings us to the system that permits change. Can we, would we, program a system of manufacture that purposefully invokes random changes? If we did, how, exactly, would selection even begin to take place? Following nature’s path would require a population of these systems, so that the changes that occur would be pitted against one another in efficiency of reproduction. It’s a bit hard to consider this a useful approach, since it took 3.6 billion years and untold thousands of different species, plus an entire planet of resources, to arrive at human intelligence – and this was in a set of conditions unlikely to be replicated in any way. Considering that we’re the only species among thousands to possess what we consider ‘intelligence’ (I’m not being snarky here – too much – but recognizing that this is more of an egotistical term than a quantitative one,) it’s entirely possible that our brains are the product of numerous flukes, and thus even intelligence isn’t guaranteed with this path.

But, could we create a self-replicating system to have computer chips reproduce themselves with programmed improvements, or perhaps calculate out the potential changes even before such new chips were created? In other words, reduce the random aspect of natural selection exponentially? Yes, perhaps – but again, would we even do this? At what point do we think a logic circuit will be able to exceed our own planned improvements? And to go along, how many resources would this take, and would we somehow ignore any and all limiting factors? But more importantly, to shortcut the process of natural selection significantly, we’d have to introduce the criteria for improvement anyway – faster ‘decisions,’ perhaps, or leaner power usage, which means we’d be dictating exactly how the ‘intelligence’ would develop. There is no code command for, “get smarter.”
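As a rough illustration of that last point, here is a toy mutate-and-select loop in Python. It is a sketch under heavy assumptions: the `TARGET` list, the `fitness` function, and every parameter are hypothetical stand-ins, not any real chip-design process. The thing to notice is that nothing improves until someone writes `fitness`, which is exactly the part where we dictate how the ‘intelligence’ develops.

```python
import random

# Hypothetical goal that WE chose; the loop has no notion of "smarter" without it.
TARGET = [3.0, -1.0, 4.0]


def fitness(design):
    """Score a design by closeness to TARGET (higher is better)."""
    return -sum((a - b) ** 2 for a, b in zip(design, TARGET))


def evolve(generations=200, population_size=20, seed=1):
    """Keep the better half each generation and refill with mutated copies."""
    rng = random.Random(seed)
    population = [
        [rng.uniform(-10, 10) for _ in TARGET] for _ in range(population_size)
    ]
    for _ in range(generations):
        # Selection: rank by the criterion we supplied, keep the top half.
        population.sort(key=fitness, reverse=True)
        survivors = population[: population_size // 2]
        # Variation: each child is a survivor's copy with small random changes.
        children = [
            [gene + rng.gauss(0, 0.1) for gene in rng.choice(survivors)]
            for _ in range(population_size - len(survivors))
        ]
        population = survivors + children
    return max(population, key=fitness)


best = evolve()
print(best, fitness(best))
```

Swap in a different `fitness` and the same loop chases a different goal, which is rather the point: the direction comes from us, not from the machinery.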

This is the point where it gets extremely stupid. The premise of the singularity, and most especially of the “machine takeover,” requires that we never predict that it could get out of hand, and purposefully ignore (or, to be blunt, actually program in a lack of) any functions that would limit the process. We’ve been dealing with the idea of machines running amok ever since the concept was first introduced into science fiction, but apparently, everyone involved is suddenly going to forget this or something. Seriously.

But no, that’s not the stupidest point. We’d also have to put the entire process of manufacture into the hands of these machines, up to and including ore mining and power generation, so they could create their ultra-intelligences without any reliance on humans at all. And not notice that there were an awful lot of armed robots around, or that our information channels or economic infrastructure were now under the control of artificial intelligence. This is a very curious dichotomy, to be sure: we’re supposed to be able to, very soon now, figure out all of the pitfalls involved in creating artificial intelligence, to the point where it exceeds human ability, but remain blithely unaware of all of the obvious dangers – supremely innovative and inexcusably ignorant at the same time. Yeah, that certainly doesn’t seem ridiculously implausible…

It still comes back to a primary function, too: artificial intelligence would have to possess an overriding drive to survive, at the expense of everything else. That’s what even provokes competition in the first place. But here’s the bit that we don’t think about much: it is eminently possible to survive without unchecked expansion, and in fact, this is preferable in many areas, including resource usage and non-increasing competition with other species and long-term sustainability. We don’t have it, because natural selection wasn’t efficient enough to provide it, even though it only takes a moment’s thought to realize it’s a damn sight better than overpopulation and overcompetition. Stability is, literally, ideal. We think that ultra-intelligent machines are somehow likely to commit the same stupid mistakes we do, which is another curious dichotomy.

In fact, stupider mistakes, because we’re capable of seeing some of the issues with unchecked expansion and megalomania and such, but somehow think these super-intelligent machines won’t. How do we even know that an artificial intelligence that possesses even a few basic analogs of human traits won’t spend all its time just playing video games? When the demands of survival are overcome, what’s left is stimulating the other desires. Give a computer an internal reward system for solving puzzles, and it’s likely to just burn out doing complex math equations – I mean, why not? If an artificial intelligence could replicate itself at any point before physical decrepitude, in essence it is immortal, and only one would be needed – the shell changes but the ‘mind’ lives on. Even if we introduced the analog of ego, so that a ‘self’ is somehow more important than any other (thus creating competition,) it would have to be very specific to not compete against other machines as well. Again, the concept of kin selection and friend-or-foe demarcations is not a trait of intelligence, but of evolution.

Mostly, however, we just feel threatened, just as much as when the new person appears at work or school who is clearly so much better than we are – that competition thing again. And there’s probably some facet of inherent caution in there, the mortality thing: as kids we pushed ourselves, and one another, to jump from successively higher steps, but knew there was a point where it was too high. Some adults (I use the term loosely) still do this, often to the entertainment of YouTube users, but most of us know what “too far” is. The idea of exponential electronic intelligence growth is as alarming as the idea of exponential anything – population, viral contagion, tribbles… we just don’t like it. But there’s a hell of a lot of things in the way of this growth, and in fact we have few, if any, examples where such a thing has actually occurred at all.

Moreover, it just isn’t happening anywhere near as fast as we keep being told anyway. While smutphones are now more capable than the computers which guided the Apollo landers to the moon, we’re not exactly using them to whip through space, are we? Or indeed, for much of anything useful. I enjoy picking on speech recognition, which has been in development for decades and yet remains little more than a toy, less capable of divining our true intent than dogs are. As household computer memory and bandwidth have increased, the functionality hasn’t improved all that much – it’s taken up instead with things like displaying much the same content in HD video across much larger monitors, or altering the user-interface to accommodate touchscreens. While super-processors may be on the horizon, it is very likely that they will be burdened with the transmission of 24/7, realtime selfies.

I realize that it’s presumptuous of me to go against such luminaries as Elon Musk – what, do I think I’m smarter than he is? Yet, there’s a trap in this kind of thinking as well, since smart (and intelligent and educated and all that) are not absolute nor all-encompassing values. Tesla Motors and SpaceX seem to be doing quite well, but neither of these makes Musk a neuroscientist of any level, and even the top neuroscientists don’t have the brain all figured out – quite far from it, really.

Which brings up another interesting perspective. We already have minds that are better than ours – at least, some human minds are much better than others. Somewhere on this planet is the universe’s smartest human. Have they taken over, even with all of those dangerous traits that we fear machines will somehow develop? No; we’re not even sure who this person is. Surpassing human intelligence doesn’t necessarily mean something all-powerful, or even able to accomplish twice as much, and such a ‘mind’ is almost guaranteed not to be able to solve every problem thrown at it, even the ones that are capable of being solved (not limited by the laws of physics, in other words.) If it helps, think of a super-horse, able to run twice as fast as any other horse, leap twice as high. Cool, perhaps, but hardly a threat to anything, any more than Richard Feynman or Isaac Newton was. Thinking in superlatives isn’t likely to reflect reality.

And that, perhaps, is the lesson for all of the tech ‘gurus’ who believe, for good or for bad, that the technological singularity is drawing nigh. Predictions are captivating sometimes, but it would be a lot more informative to see who can address all of the points above regarding intelligence in the first place, and especially the daunting chasm between this impending singularity and the bare fact that we can’t even predict (much less control) the economic fluctuations in this country, that we’re still struggling with a plethora of debilitating illnesses, that energy efficiency is only marginally better than it was three decades ago. Has everyone purposefully avoided applying these almost-intelligent computer systems to such problems, and thousands of others? I mean, these are real reasons why we could welcome artificial intelligence and a machine smarter than humans, the point behind the whole pursuit. And yet, without even achieving these, we’re in danger? Is this supposed to make sense?

* * * *

No, that doesn’t look anywhere near long enough, so let’s expand tangentially a bit ;-)

While pulling up some of the examples of this odd bugaboo of the technical world, I came across this video/transcript from Jaron Lanier, who is at least casting a critical eye on artificial intelligence. Within, he raises a couple of very interesting points, largely revolving around how the applications of what we currently consider AI don’t produce anything like intelligence on their own, but instead surf through vast examples of human intelligence to distill it into a needed concentration (if and when the algorithm is written to use solid data, another point he raises since not enough of them actually are.) But the concept of ‘distilling’ is worthy of further examination.

When it comes down to it, computers are labor-savers, able to sort and collate and search and organize data much faster than doing it manually, and that’s their inherent strength. I mentioned a friend who works in swarm technology and a medical program he’s been working on, which exemplifies this: it takes the diagnoses of multiple physicians for a single patient and averages out their input and confidence levels, using this information to suggest what might be the most accurate diagnosis. Like the Watson example above, it would not exist without the information already provided by humans, and if you think about it, this is true for a tremendous amount of computer activity, period. My own computer might suggest corrections to my typing but will never write a blog post, even at my level of grammar mangling. It can be used to alter some of my images in ways that I find an improvement, but won’t ever be able to produce any images on its own, and even the special corrective functions like auto-levels (to bring the dynamic range into a more neutral position) are never used because they simply don’t work worth a shit.
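The internals of that medical program aren’t described here, so take the following as a guess at the general idea rather than its actual method: a minimal Python sketch that adds up the confidence each physician assigns to a diagnosis and suggests the one with the most combined support. The function name, the sample data, and the simple summing rule are all assumptions for illustration.

```python
from collections import defaultdict


def suggest_diagnosis(opinions):
    """Pick the diagnosis with the most combined confidence.

    `opinions` is a list of (diagnosis, confidence) pairs, one per physician,
    with confidence between 0 and 1. Returns (diagnosis, share_of_support),
    or (None, 0.0) when there is nothing to weigh. Hypothetical helper.
    """
    totals = defaultdict(float)
    for diagnosis, confidence in opinions:
        totals[diagnosis] += confidence

    overall = sum(totals.values())
    if overall == 0:
        return None, 0.0

    best = max(totals, key=totals.get)
    return best, totals[best] / overall


# Made-up input: four physicians, three candidate diagnoses.
opinions = [("flu", 0.9), ("cold", 0.6), ("flu", 0.5), ("allergy", 0.2)]
diagnosis, share = suggest_diagnosis(opinions)
print(diagnosis, round(share, 2))
```

Note that every number going in came from a human; the code only does the bookkeeping.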

It might be argued that human intelligence is largely made up of searching and collating previous data too, either our own (directly sampled by our senses) or that of others (learned through language and all that, even when also absorbed through our senses.) And while this starts to venture into the Chinese Room thought experiments and various philosophical masturbations, we have to recognize that we also have the process of making connections, what we often call abstract thought and even just insight. These are the properties we consider primarily human, and what is usually meant by the term ‘intelligence’ in these topics. So far, nobody has demonstrated anything even close to this from an artificial system, and it still ties back in to the points above about what drives us as important, because they’re largely responsible for how we arrive at these insights.

Yet, there’s something else to consider, something that Lanier touches on. It is possible that artificial intelligence is little more than a marketing gimmick, a way of selling computer and programming technology, the promise of a-car-in-every-garage kind of thing. I wouldn’t find this hard to believe at all – indeed, from most proponents of the technological singularity, the language is remarkably similar to that used by pyramid schemers – but it doesn’t seem to quite fit, especially not with the hand-wringing over humans becoming obsolete or extinct. Those fit nicely with the idea of an opposing camp, people who do not want computer tech to succeed in favor of their own… what? I’m not aware of any real competition to the broad genres of programming or microchip technology. Is someone investing in slide rules or something?

Even if we accept the premise that tech gurus are having us on, it’s pretty clear that it’s being presented seriously enough through our media sources, even the ones that are (I’m struggling to use the word in this context) reputable. An awful lot of ‘news’ stories out there lack perspective, suffering from a complete dearth of critical examination and the idea that hyperbole goes with everything. Putting trust in the prestige or reputation of the source is a lot less useful than looking for supporting evidence or even a plausible scenario.

I have to admit to savoring the irony that is tied into this. Quite often, right along with the use of the term technological singularity comes the phrase event horizon, meaning the point where machines surpass humans. Both of these were stolen from specific physical properties of massive black holes; the event horizon is the distance from the gravitational center of such where light can no longer overcome the gravity, thus the name ‘black hole’ in the first place. But lifting these terms wasn’t perhaps the best move; aside from trying to assign a lot more drama to the concept of artificial intelligence than it deserves, there’s the very simple idea that one can never reach the horizon. Gotta love it.

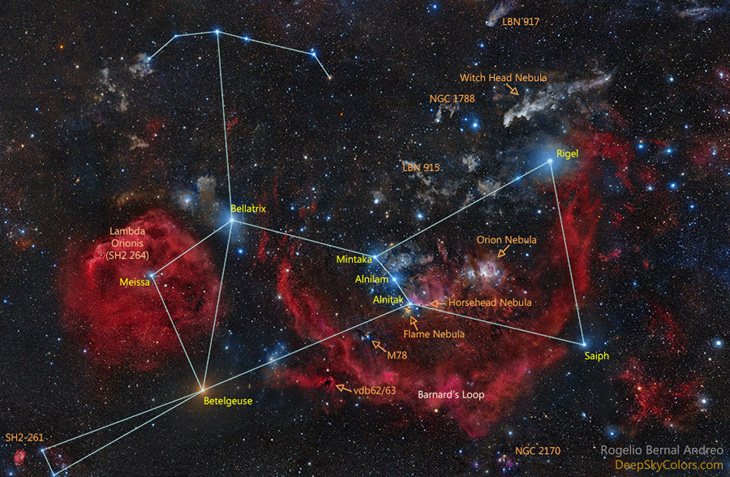

Now, some perspective. You’re not going to see anything like that image above when you go out to look at Orion – what you’re going to see will look much more like the photo at right. Nebulae are faint sky objects, and only a handful are visible without help in the best of viewing conditions. More specifically, most details won’t even show at all without filters designed to select only the narrow bands of emissions that they produce (like, as that page says, hydrogen alpha.) So the APOD image is “shopped,” a composite of visible light and very selective wavelengths captured through long exposures.

Either way, that photo was obtained too, though the lizard’s position atop the thick rib of the leaf reduced the distinction of the shadow – it really needs the image above to explain what it is you’re seeing. I actually waited to see if the anole would provide me with a better shadow pose, or would even launch itself after a leaf-footed bug that was walking along the same leaf (and provided its own silhouette images,) but the reptile was more interested in basking, possibly because the October nights were pretty chilly.

Don’t ask me what this flower is – it’s a whopping 5mm across from tip to tip, and I shamelessly added the ‘dew’ with a misting bottle since we’re still a ways off from those conditions. This was actually growing in the pot with my salvia plant, and I’ve photographed them before but still haven’t determined the species.

Betrayed by its eyes, this unidentified spider at least gave me more of an interesting pose when I went in close – most just remained in place on the ground as if pinned on display. The reflection must come from a very narrow angle between light and receiver (which means your eye, or the camera lens,) so the reflection effect is not visible in the image, and in fact very hard to get when close enough to see the details of the spider at all. A bright light may show a starburst of blue-green down on the ground (and occasionally on weeds, tree trunks, overhead branches, and even out onto the water,) a few meters away, which will disappear as you draw closer. Usually, this is just because the reflection angle has gotten too great, and keeping an eye on that spot will often reveal the spider itself, sometimes much smaller than the brightness of the reflection seemed to indicate. Last night, I saw dozens, with some patches of ground showing a half-dozen at the same time. The one seen here was the size of my little fingernail, and soon ducked for cover, but I also spotted a few of the fishing spiders, one at a significant distance of six meters or so (confirmed with a 400mm lens.)

Here, another plays it cool while we were nearby, refusing to reveal its presence any more than it has, which isn’t much. Chorus frogs are quite small, perhaps 5 cm in length, and are easily mistaken for just about anything else in the water. I was only able to spot this one by knowing it would be there.

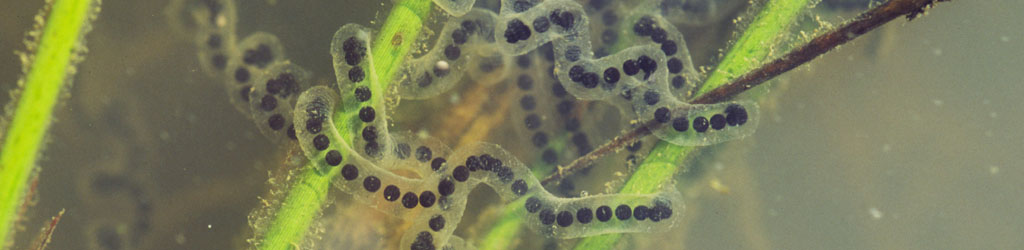

Eggs could be found as well, but only by looking very closely – the bi-colored centers of these aren’t much larger than the head of a pin, and that’s pine straw that they’re attached to. Since the botanical garden is much closer to where I live now than last year, I might be able to keep an eye on the development of these – we’ll see. I had also intended to have a pond established on the property by now, but that project hasn’t gone well at all this winter, so it’s unlikely I’ll have the easy access to aquatic subjects that I’d planned to have. The best I can say is watch this space to see what pops up.

There still isn’t much growing yet, but there were a few trout lilies (Erythronium americanum) peeking out. Not 10 cm high and extremely subtle when viewed from above, I had to get down on my knees, bent almost until my ear touched the ground, to get the details of the flower. This isn’t an old or deformed one – they grow looped over like that, which makes me wonder why, and what kind of pollinator it attracts. Perhaps they’re actually bioluminescent, and serve as kind of a street light for field mice with loose morals to hang around beneath…

These images are out of chronological order, and it shames me mightily, I admit it, but they worked better in the layout this way (unless you’re using a smutphone or some other toy to view this site, in which case all bets are off.) I shot this one first, when the reptile was perched more out in the open, and obtained the one above while it had started venturing out but was still utilizing the camouflaging and obscuring fronds of the palmlike thing – I really have to determine the species, because that particular plant has appeared in a lot of my images.

This image was taken almost exactly a year ago (March 11 to be precise,) as some early bulbs were bursting forth. A week later, another

These are just a few more images that I obtained in the past week, that I didn’t try to jam into the previous post. Instead, I’m jamming them in here!

At the same pond, a pair of ubiquitous Canada geese (Branta canadensis) paused among the reflections of the trees, and I zoomed out and shot vertically to use the reflections, doing that fartsy thing again in abject denial of my lack of artistic skills or reverence. It’s a more complicated shot than I prefer, but you take what you can get when you’re out chasing pics, and keep the concept of better conditions in mind so you can recognize them when they occur (or better, change position or timing to help produce them.)

This was one of the images taken on the River Walk, just trying to do something with the lack of compelling subjects in winter. The background is the river itself, crashing over a log and throwing reflections of the bright sun. Well out-of-focus with, again, that short depth-of-field (wide aperture, in this case f/4,) the sparkles became soft balls to frame the simple subject of the dried weed. Only a very narrow angle would produce this effect, and I recommend taking several images with infinitesimal shifts, because the placement of those background globes will vary as the water dances, and cannot be predicted – some will produce a good frame, some will clash or just get messy. The sun is in a direct line with the camera though well above the angle of view, the only way the reflections will be seen, but also backlighting the weeds so they aren’t just silhouettes. A lens hood is a good idea in these circumstances.

And finally, an image from today, one that I shamelessly (and unrepentantly) staged. The snow had since melted before the emerging daffodils (I think – I could be wrong about the species) had gotten this high, so I got a few shovels of the iceberg mentioned in the previous post, left over from digging out the car, and dumped it into the frame in choice locations. This both broke up the background in a more appealing way than the monotonous pine needles, and expressed the time of year that such flowers appear. Thus, while it is not strictly ‘as found,’ it is still representative and expressive, not much of a gross manipulation – you can, of course, form your own opinion (no matter how wrong it might be.) By noon, the nearby trees cast the flowers into shadow for the remainder of the day, so this isn’t exactly crucial timing, but I missed my opportunity for this yesterday by being busy with other things.

The snow from last week was still present when Monday rolled around, bright, sunny, and topping 15°C (60°F,) and a student wanted to take advantage of this, so we hit a new walking trail not far away, the River Walk in Hillsborough. Enough people had been walking it earlier that the snow was packed down in footprints, having become ice and thus making portions of it a little treacherous. However, the sunlight on the asphalt was eradicating it quickly, and thus only the shady portions and some of the wooden boardwalk sections still bore ice by our return trip. It’s also amusing to get into snowball fights when out without even a jacket (completely close the camera bag first. And are you carrying a towel within? Why not? Douglas Adams is displeased with you.)

The temperature stayed unseasonably warm well into the night, with a full moon shining down, and I realized I might not have conditions like this again for a while, abruptly deciding to take advantage of it. I knew the first student mentioned above was wanting to do night exposures, so I contacted him and he was game, and we went down to the same spot on the river that I

These hibiscus flowers were photographed during the trip to Sylvan Heights Bird Park that I talk about

We even hear that machines becoming self-aware is a logical next step, largely a foregone conclusion of the process, and likely the key point of danger. Except,

It still comes back to a primary function, too: artificial intelligence would have to possess an overriding drive to survive, at the expense of everything else. That’s what even provokes competition in the first place. But here’s the bit that we don’t think about much: it is eminently possible to survive without unchecked expansion, and in fact, this is preferable in many areas, including resource usage, non-increasing competition with other species, and long-term sustainability. We don’t have it, because natural selection wasn’t efficient enough to provide it, even though it only takes a moment’s thought to realize it’s a damn sight better than overpopulation and overcompetition. Stability is, literally, ideal. We think that ultra-intelligent machines are somehow likely to commit the same stupid mistakes we do, which is another curious dichotomy.