I find that I’ve managed to limit myself by choosing the “But how?” classification for posts of this nature, because not everything that I want to address fits into that question format very well. So just chalk it up to poetic license (or poor planning) when I fudge the structure a little bit, like now, when we examine How come atheists are so mean?

The biggest question, of course, is whether atheists really are mean, or noticeably meaner than, say, religious folk, baristas, or WalMart shoppers – I think it’s safe to say everyone is mean at one time or another. Getting an objective measure of how mean atheists are as a body would be exceptionally difficult, since ‘mean’ is a value judgment and subjective anyway – we’ll be coming back to this. Plus, since atheism is a standpoint, and has nothing to do with rules of behavior, or member requirements, or temperament or lifestyle or diet or shoe size, there is little likelihood that the trait can be shown to exist in any form. By a wide margin, the only time most people even know they’re speaking to an atheist is when the topic of discussion is the less-savory practices of religion; understandably, this is going to skew the impression a bit.

But let’s take this example. Are atheists particularly mean in their approaches within such discussions? There are two steps by which we could demonstrate that this is a potentially warranted conclusion: 1) count up all of the responses from atheists, or at least those considered likely to be atheists, and 2) determine if the majority of the points made by those show an unreasonable amount of animosity. Yeah, that’s likely to be a mess, and again, very subjective, but at the very least this starts carrying us away from noting only the responses that seem most abusive. Far too many people operate on the principle that “I read this nasty comment, and it was by an atheist, therefore atheists are mean.” By that same standard, all YouTube users are vacuous bigots and all online gamers are homophobic – and all religious folk are creationists. Labels are an easy way to avoid the strenuous activity of thinking.

Even then, we won’t have a very good number to work with, because not every atheist even reading the discussions is commenting. By nature, the calmer ones wouldn’t even bother commenting, and perhaps, the nastier ones broke their keyboard in fury before their submission posted. There really isn’t a useful way to obtain a number that will work.

When it comes to forms of media, is it easy to pick out the atheists because of their abrasive attitudes? Are they noticeably more nasty than, for instance, Republicans, or vegans, or feminists, or sports fans? Does the number of epithets, and derogatory terms, and personal attacks, and outright lies from atheists exceed that from any other classification, those named or otherwise? If we look at what passes for political ‘discourse,’ in this country at least, we see a hell of a lot of petulance, bias, dishonesty, and outright hostility – yet no one is wringing their hands in despair over this state of affairs, are they? I hope I’ve made a point that being mean is remarkably common, which doesn’t excuse such behavior, from atheists or anyone else, but does mean that selecting any one group as standing out in this regard falls somewhere between ridiculously biased and laughably naïve.

Very likely, a lot of the impression also has to do with internet memes, and people being influenced by what’s popular – the phrase “shrill and strident” is now a joke among most outspoken atheists after the number of times it has been applied, in total seriousness, to people like Richard Dawkins and Christopher Hitchens, neither of whom can even remotely be considered “shrill,” and strident is none too apt either. Curiously, “strident” gets so little use otherwise that its appearance in such discussions is very likely to be just mindless repetition, and a certain number of those using it couldn’t even define it usefully. Just as movie reviews can warp someone’s impression of a film, hearing a pointed descriptive phrase can cause a lot of people to find those qualities above all else; advertisers have known this for well over a century. Repetition plays a part as well, as does a curious trait brought to new heights by online socialization: the bandwagon. Bacon, for instance, is considered fairly tasty by many, but I doubt anyone believes it outranks other foods by such a huge margin that we need bacon soap, bacon breath mints, and bacon-wrapped scallops. Wait, hold on – we do need that last one…

But this gives an indication of something that might be at work (I’m trying to be very objective here, because there’s little doubt in my mind,) and that’s something we could call “defensive hyperbole.” We all get defensive when our viewpoint is challenged, and there’s certainly a tendency to exaggerate the attack, even by considering it an attack in the first place. Simple disagreements turn into arguments quickly, with counter-accusations and personal remarks appearing frequently – people can be notoriously incapable of reading/hearing only what’s been said without ‘reading between the lines.’

A couple of things magnify this defensiveness, as well. Religion is frequently wielded as an indicator of status, an actual label that says, ‘good,’ and when this is questioned in any way, people often consider it a personal affront; if religion is being called bad, then I’m being called bad. Sometimes this really is the message expressed by some particular atheist, but not always, and not often in my experience. As a personal example, I consider creation stories to be entirely mythical, and much of the moral guidance therein to be appalling, but I don’t consider anyone following religion to be automatically bad, just mistaken (to varying degrees too, since no one that I’ve ever seen follows their own religion strictly.) Actions can be bad; people are just people. The other factor that magnifies the defensiveness is the in-group influence – the peculiar idea that if a lot of people believe so-and-so is true, then it must be true, something that a ridiculous number of people fall for. Churches operate on this principle constantly – a church is nothing but an in-group, really – and religions usually make a big show of their presence in countless ways to take advantage of this human trait. So when any religious behavior is questioned or denigrated, the attack is against ‘me and everyone I know,’ as well as openly challenging this method of surety at the root. That’s pretty rude, isn’t it?

Most of this might be considered making excuses, which isn’t the point at all. I’ve seen enough examples of meanness, as well as being so myself, to know it exists. But this doesn’t prove that it’s a defining trait of atheists, and I hope I’m showing that objective examination is what any honest person should engage in. Indeed, in such discussions objectivity has been requested countless times from religious folk, concerned that they were being lumped in with the extremist flakes, so I would think extending the same courtesy would be considered fair.

Let’s take a look at fair, though. The topics of discussion when it comes to religion are often quite contentious: censorship, restrictive legislation, selective and questionable education, bigotry, classism, and even ridiculous medical practices and physical attacks – gay beatings, murder of abortion clinic doctors, beheading infidels – the list is not pretty. If anyone tries to deny religions are deeply involved in all of these, and many more besides, they will not like how strong my response is – it’s the nature not just of disagreement, but of demonstrating how much one disagrees and, to no small extent, how disreputable anyone might be for trying to ignore such behavior. This is part of the social contract; we, all humans, have to define what’s acceptable and what’s not, and there are a lot of ways to define the level of unacceptability. A police officer could kindly, quietly, say, “Put down the weapon, please” – it would be mean to raise their voice, right? C’mon. Tone is as important, if not more so, than content in communication, and there are many levels of disagreement or disapproval. When we look at that list above, they’re all unacceptable, but some much more so than others.

Tied into this is an outright blindness to position-swapping. Many, probably most, religious folk defend their faith as a personal choice – they’re not required to make sense, nor have to convince anyone else, since it’s their prerogative to choose what they want, which is fine, actually; no one could take it away anyway. But none of those behaviors listed above, the ones that arise in discussions regarding religion constantly, are expressions of personal choice in the slightest; they’re expressions of authority, privilege, and elitism, the sudden switch from personal choice to a superiority complex, and the belief that their faith is what everyone else should obey. Parents may consider public school curricula to be damaging to their child, which is an opinion, however misguided, that they’re entitled to. They’re not entitled to dictate what anyone else’s child should or should not receive. They may be openly disgusted over the idea of same-sex marriage, which is fine – don’t get married to the same sex, then. Others have their own opinions, believe it or not – opinions are like that. But when it is pointed out that religious folk have no authority to dictate to others, that there are even laws against trying to peddle such influences, abruptly the position swaps back and the whining starts that someone is trying to take away their personal liberties again. This isn’t any form of reasoned discussion, and does not deserve to be treated as such.

If we examine any action by itself, not associated with any worldview or belief system, it’s often not too hard to view it objectively, judging its value solely on what it accomplishes. But when it is attached to a larger idea as part and parcel, then objectivity often vanishes as it’s absorbed into the attitude towards the entire system. Nobody would ever say, “I let my children play in the street because I’m from Finland” – that makes no particular sense – but people do say, “I won’t provide my child experienced medical attention because it’s against my religion,” and in some places there are laws that permit this, even though it makes no more sense. Very often, religious folk expect a deference to their belief system, as if it’s been established as rational and/or beneficial, so of course pointing out how harmful various practices are is contrasted against this expectation. There’s no reason whatsoever that someone’s personal worldview should be allowed to place a child in mortal jeopardy, but idiots in times past have permitted this kind of nonsense, and now anyone who dares to inject sense into the discussion is somehow being cruel.

And let’s not ignore another simple factor: that accusations of tone or impropriety are often just misdirection; they have nothing to do with the content of the commentary, the points made, or any attempt to address them, and it’s safe to say that a certain percentage of time, it’s purposeful avoidance of a discussion that isn’t going to turn out well. When someone cannot respond usefully to the point, they frequently resort to changing the subject or going on the attack. In fact, if anyone bothers to examine the religious responses to scientific articles that establish evolution, the inaccuracy of scripture, or the abusive standards of particular sects, they’ll rarely see points of rebuttal; instead, by a wide margin, come the protests of impropriety – bookended, of course, by assertive mantras of holy truth. It’s unfortunate when we fall for it, as if criticism is somehow socially unlawful.

Finally, we get down to the comparison, which can be described as nothing short of hulking hypocrisy. Atheism is routinely associated with satanism, nihilism, hedonism, immorality, and a host of other disreputable epithets – issued by no one other than fine, upstanding, kind religious folk. When there is any form of public display regarding even talks by atheists, all the way up to billboards advancing secularism, the vandalism appears almost immediately, imagine that. The sciences, as noted above, routinely come under attack, to the point where pressure is constantly applied not to teach many aspects in schools, even though they’ve proven their worth far more than any religious text. Legislation to prohibit same-sex marriage is not promoted by mellow, kindly people, and the placards carried by religious demonstrators cannot charitably be called, “pleasant.” Virtually anything that kids find entertaining, from board games to books and movies, routinely receives fierce accusations of heinous content or practices, so much so that I may start a new site highlighting these, since I will never run out of content. And of course, any prominent atheist will receive countless e-mails and letters assuring them that they will be tormented for all eternity. Pointing out that atheists find the concept ludicrous is missing the point; the upstanding souls that make such avowals believe it wholeheartedly, or at least claim to. You’ll pardon me if I dare express my opinion that all this behavior is not just mean, but vindictive and neurotic as well. These are not happy, well-adjusted people – not by any measure. Nor is there anyone leaping in to decry these tactics for the sake of polite discourse.

Is this representative of all religious folk? No. I’m more than happy to maintain an objective attitude and believe that it’s the lunatic fringe, avoiding the application of simplistic labels intended only to strengthen my arguments. And I would hope for the same perspective in return.

It’s hard to do artistic insect photography, or at least for me to do it, though this is an attempt. Most times I aim for detail images, or behavior, and let’s face it, the market for gallery prints of bugs, especially spiders, is rather limited. But I like to believe the position manages to change the spider from menacing to almost-shy, with the leaf dominating the frame and the spider relegated to a corner, as it were – certainly the “ready-to-pounce” posture is almost obscured by this angle. There’s also enough leaf detail to imply scale a bit better than many of my images, conveying that this really is a small specimen.

As the caterpillars grew larger, they tackled the leaves in a different manner, making a more noticeable dent in the foliage. Their numbers dwindled, however, likely due to predation but I never witnessed what was responsible; all I know is only a handful made it to chrysalis stage, and I never got to see those hatch out either (this is largely why these images remained in the blog folder unused – I try to build a story when I can.) When you’re this close, by the way, it’s easy to actually watch their progress through the leaf, and after only a few minutes you get the impression they should be stuffed to the gills by now.

One of the lynx spiders that I followed all year put on this display one evening, and illustrated a curious trait, which I’ll get to in a minute. Some arachnids, like the fishing spiders, split their exoskeletons horizontally along the sides and flip the top portion out of the way, while the lynx spiders (and mantids) split longitudinally along the ‘spine’ and exit that way. This specimen is also hanging from its abdomen, and both this one and the mantis remained largely motionless for a while, despite the fact that I was obviously nearby; I suppose that it takes time for them to feel confident in the hardness of their new chitin, and in the meantime it is better to be still and not attract attention. But after a while, this one (a female) stretched out and grabbed the network of web strands that only makes a faint appearance in these images, and detached herself from the molted skin.

Another break, a belted kingfisher (Megaceryle alcyon) perched conspicuously on a dead snag. This was taken while we were on

Anyway, a few years ago I had hand-painted a green sea turtle onto a vinyl spare tire cover for The Girlfriend, who is a sea turtle enthusiast. Ostensibly it was a christmas present, but it ended up taking longer than I wanted so she didn’t get it until later. She was not at all displeased over this, however, and has been ensuring that it stays in good condition as long as possible. On christmas morning, I sneaked out and mounted the tire cover on her vehicle, then when gift exchange came around, I presented her with a card which hinted vaguely that she should be looking at the spare tire. As we went outside, it was apparent the moment she spotted the cover, from the sudden exclamation, and even more gratifying when she ran her fingers across it and asked incredulously, “You painted this?” I think she’s pleased with it.

This project actually went a lot smoother than I anticipated. I had a cylinder of white soapstone that I received as a present myself some years back, and the color and shape lent itself to the idea, since cats curl up into balls anyway and tend to mold themselves to their surroundings. I located a couple of images as a guide and sketched out the rough shape, and somehow managed to keep the proportions correct throughout the work (the biggest exception was the left foreleg, which was a little large at first.) Pieces like this are difficult to photograph well enough to see all the details at once – they usually benefit from being able to be turned in the light so the shadows fall differently – but I think you can still faintly make out the curl of the “fists” that marks a happy cat, something Kaylee does frequently and The Girlfriend finds adorable. A little later the same day, Kaylee made a spirited attempt to mimic her likeness on the bed, almost exactly where I’d placed the gift earlier – she often covers her nose with her paw, and that was a detail I knew I had to include. She wasn’t cooperative enough to pose like this before I started work on the piece, so I had to cheat and again work from images gathered online.

Anyway, in days gone by I’ve recognized this barely-noticeable event by going back to see what I was photographing during the summer solstice, finding that I had no digital images (the only ones with dependable date stamps) from those particular days anyway – I don’t take photos every day, though some people I know have a hard time believing this. So this time around, I just decided to see what I could find today.

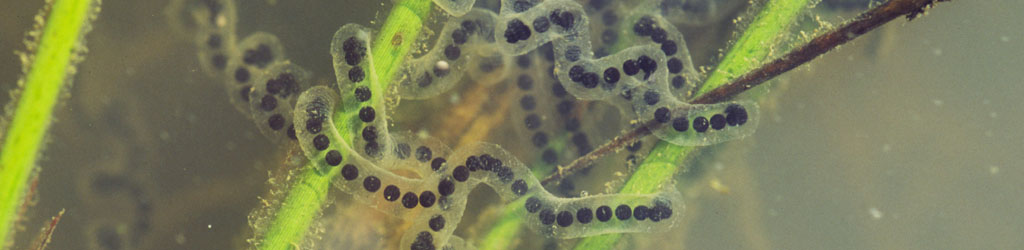

These insects were the subject of my first “

Spurred on by

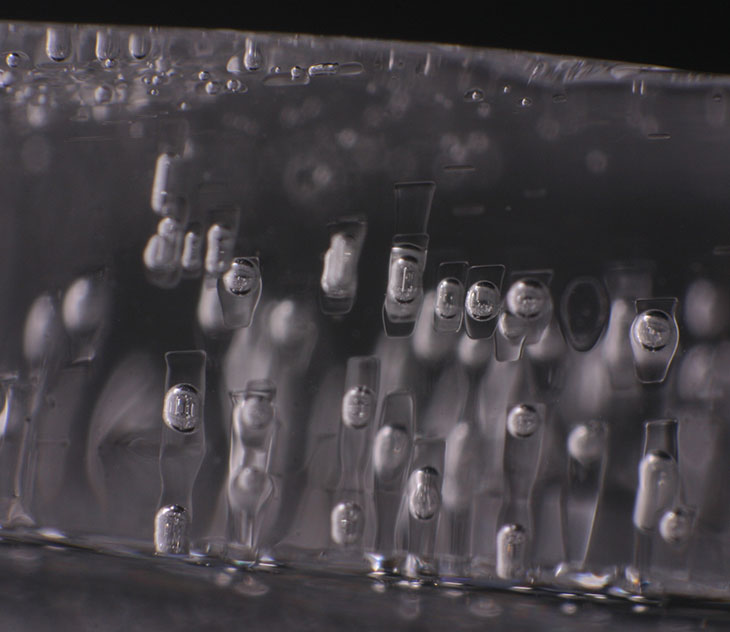

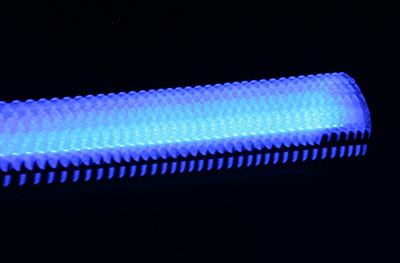

This is an LED christmas light, also taken by panning during a one-second exposure. Alternating current causes all lights to blink, and since LEDs don’t use a filament, they go out immediately rather than fading – you cannot detect this visually, but it’s revealed with a simple camera trick. If you try it with a phone camera you’ll probably get something even weirder, but that’s because phone cameras are goofy. Anyway, Sirius really could go completely black to our eyes, if it does it fast enough (see those gaps in the top image again,) and we might never know it.
