Not a stunning one this week, but hopefully, one that will produce a follow-up sometime in the near future. I was digging in some soft mulch alongside the back steps and unearthed this:

Definitely an egg, almost certainly of a Carolina anole (Anolis carolinensis) – I’ve seen the eggs of ground skinks before and they’re smaller than this. While I can’t prove that it’s not from a five-lined skink, which makes the occasional appearance on the property, we have scads of anoles, so we’re going with that for now.

I decided I’d try to hatch this one out myself, and created a small terrarium/incubator that I could monitor – all they really need is warmth and moisture, so it has a bed of mulch, with the egg placed up against the terrarium glass so I can see what’s happening. I’d hatched out some ground skink eggs, many years ago, but never got any photos of them emerging, though I was closely observing one that showed the first crack; naturally, the skink emerged in the few minutes that I took a break. I’m better equipped to tackle such photos now, so hopefully this will come to fruition.

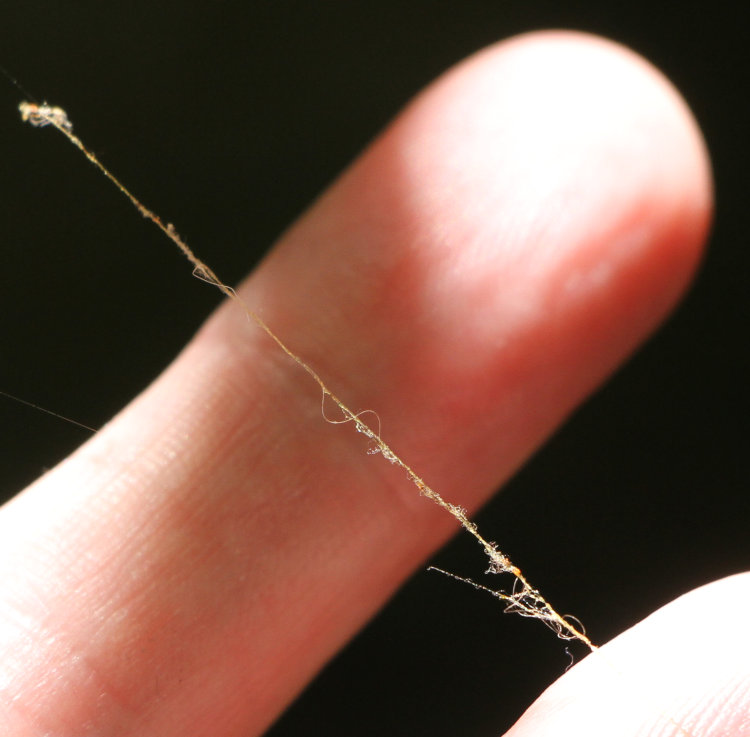

Meanwhile, we also have this, captured early Thursday morning (well after finding the egg):

I’ve seen some portly anoles before, but never one that looked this ready to pop at any moment. She was asleep on the lamp post, and I did a few frames and let her be.

After daybreak, I found one hanging out in almost the same location, but not looking pregnant at all.

While this was taken in the afternoon, I first saw the specimen a couple hours after sunrise, and the proximity to the same location as the pregnant one made me wonder if it was the same one, having laid its egg(s) in the interim. Some careful comparison of photos followed, mostly looking at some abrasions/scarring on the shoulders, which seemed the same though I didn’t have photos from the same angles. I was looking at the wrong end, though. Here we have a full-length shot from the night:

And an inset crop of that tail tip:

That’s pretty distinctive, you have to admit, some old injury that healed oddly. And now, a closeup of the tail of the daytime anole:

Yep, that’s a match all right. So I’m surmising that somewhere at the base of the lamp post is her egg stash, and since I know, down to a few hours, when it/they were laid, I can be watching for the emergence of the newborn(s) right on schedule. Whether they’ll show out in the open right away remains to be seen, though – every one that I’ve found previously gives the impression of being some time after hatching, and I suspect they might stay hidden deep in foliage for the first week or two. The base of the lamp post is all thick liriope, so they’ll have a good spot for it.

The incubation period is five to seven weeks, so I’ll be watching carefully at the end of July. Meanwhile, we have another week before the first turtle nest may start to hatch, and I have four of them to monitor. You’d think with that many, I’d catch the emergence of at least one set of newborn turtles…

* * *

LAST MINUTE ADDITION (Okay, it’s a little less than three hours before this is scheduled to post): While looking for something else in pics already posted this year, I found that this same anole (most likely) has appeared here before. Granted, it’s probably happened quite a bit, I just couldn’t tell for sure because I’m anolist and they all look alike, but this one has another distinctive trait. Check out the lump under the jaw, visible best in this pic but still there in some of the images above:

And now, we go back a few months to before the lamp post had been replaced, but the same location:

I shouldn’t have to tell you this, but yes, they can change color that much, and during the winter months (this was shot in February) they will typically be found in shades of brown because there aren’t that many bright green leaves on hand, plus it likely helps them absorb solar radiation. When I found her during daylight yesterday morning, she was roughly olive in color, which may or may not have been related to laying her egg(s) not long before.

I checked: the images from that photo session in February don’t ever show her tail, so I don’t know if it was injured at that point or not. Regardless, I just had to add this trivial bit.